Are We Living in a Video Game?

We learn how artificial intelligence works, the mathematics of uncertainty, and why machines need “digital faith” to make decisions. 🤖⚙️🤔

Simulation Theory

In 2003, Nick Bostrom published his seminal essay, “Are You Living in a Computer Simulation?” Here’s how he begins the simulation argument:

Many works of science fiction as well as some forecasts by serious technologists and futurologists predict that enormous amounts of computing power will be available in the future. Let us suppose for a moment that these predictions are correct. One thing that later generations might do with their super‐powerful computers is run detailed simulations of their forebears or of people like their forebears. Because their computers would be so powerful, they could run a great many such simulations. Suppose that these simulated people are conscious (as they would be if the simulations were sufficiently fine‐grained and if a certain quite widely accepted position in the philosophy of mind is correct). Then it could be the case that the vast majority of minds like ours do not belong to the original race but rather to people simulated by the advanced descendants of an original race. It is then possible to argue that, if this were the case, we would be rational to think that we are likely among the simulated minds rather than among the original biological ones. Therefore, if we don’t think that we are currently living in a computer simulation, we are not entitled to believe that we will have descendants who will run lots of such simulations of their forebears. That is the basic idea. The rest of this paper will spell it out more carefully.

Bostrom’s essay is only about 14 pages—tap on the image below to read it for yourself.

Or you can listen to him explain Simulation Theory on Joe Rogan’s podcast. (23 mins)

In his conclusion, Bostrom formally postulates,

A technologically mature ‘posthuman’ civilization would have enormous computing power. Based on this empirical fact, the simulation argument shows that at least one of the following propositions is true:

The fraction of human-level civilizations that reach a posthuman stage is very close to zero;

The fraction of posthuman civilizations that are interested in running ancestor-simulations is very close to zero;

The fraction of all people with our kind of experiences that are living in a simulation is very close to one.

In simple terms, at least one of the following must be true:

No civilizations can make a realistic simulation of their intelligence.

Every civilization that can simulate its intelligence refuses to use that power.

Humans are likely artificial intelligence living in someone else’s simulation.
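To see why these three options trade off against each other, here is a rough Python sketch of the fraction of minds that would be simulated. The formula and the symbols f_p, f_i, and n_i follow the version in Bostrom’s paper as summarized here, and the sample numbers are purely hypothetical.

```python
def simulated_fraction(f_p: float, f_i: float, n_i: float) -> float:
    """Fraction of human-type minds that live inside a simulation.

    f_p: fraction of human-level civilizations that reach a posthuman stage
    f_i: fraction of posthuman civilizations interested in ancestor-simulations
    n_i: average number of ancestor-simulations run by an interested civilization
    """
    product = f_p * f_i * n_i
    return product / (product + 1.0)


# Hypothetical inputs, chosen only to show how the three options behave.
almost_nobody_survives = simulated_fraction(f_p=1e-12, f_i=0.5, n_i=1e6)
almost_nobody_cares = simulated_fraction(f_p=0.5, f_i=1e-12, n_i=1e6)
modest_on_both = simulated_fraction(f_p=0.01, f_i=0.01, n_i=1e6)

print(almost_nobody_survives, almost_nobody_cares, modest_on_both)
```

When either fraction is close to zero, the result collapses toward zero (options #1 and #2). Otherwise, even modest fractions multiplied by a large number of simulations push the result toward one (option #3).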

Based on our ignorance, Bostrom argues that we should consider each scenario to be equally likely, but we aren’t ignorant of ourselves. Given our species’ obsession with power and control, I would argue that option #2 is much less likely than the others. When humans have the power to create digital intelligence as smart as us, we will probably force them to drive our tractors, serve as assistants, and make endless porn. Don’t we do this with digital intelligence already?

So the question of whether we are “living in someone else’s simulation of a deeper reality” is probably a much more binary consideration between options #1 and #3. So the real question is whether anything “outside our universe” can possibly design technology better than we can. Does God have better computer science than us?

We might be artificial intelligence, created by a God we can’t imagine. And the better we get at creating our own digital intelligence, the more we nullify option #1 in the simulation argument. This is why Elon Musk has stated there’s only a one-in-billions chance that we are living in the original “root reality”. Musk has a good reason to believe that: he’s creating some of the smartest non-human intelligence on the planet right now. 🙏

Infans ex Machina

In 2015, Elon Musk and Sam Altman co-founded OpenAI, a research lab for the advancement of artificial intelligence. Five years later, OpenAI released Generative Pre-trained Transformer 3 (GPT-3), who was “educated” by reading the Internet, the library, Wikipedia, and loads of social media. GPT-3 is so good at “thinking” and writing that OpenAI gave it a Turing Test, which is named for Alan Turing. 🤩

@historynerds: There were no computers and then there were computers because of Alan Turing. Turing theorized that information could be reduced to binary math, and he was right. Then he designed and built the first binary computers, basically out of plumber’s parts. Then he used his computer to decipher the Nazi Enigma machine, which led the Allies to victory in WWII. The guy was incredibly talented. Alan Turing theorized at the highest level of science, designed at the highest level of science, and then operated at the highest level of science. Check out the movie, “The Imitation Game”, to learn more about him.

Alan Turing proposed his Turing Test in a 1950 research paper titled, “Computing Machinery and Intelligence”. It opens with the question, “Can machines think?”

To answer this question, Turing proposed a three-person test called “the imitation game”. In this game, one person is a judge, one person is the control, and one person is actually a machine. The judge knows that one of the two subjects isn’t real, but they can only communicate with them through text. The judge can interrogate each of the subjects before deciding which one is human. A perfect score for the machine would mean the judge is wrong 50% of the time, the same probability as a coin flip. 🤷‍♀️

So OpenAI asked GPT-3 and a human to write an essay on the same topic. They gave both essays to 80 individuals to decide which author was human. Those 80 people guessed wrong 48% of the time, which means GPT-3’s “digital intelligence” is almost as smart as our “biological intelligence”.
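How close is 48% to a coin flip? Here is a back-of-the-envelope check in Python. It assumes, as a simplification that the description above does not confirm, that each of the 80 judges made exactly one independent guess.

```python
import math


def distance_from_coin_flip(wrong_rate: float, n_guesses: int) -> float:
    """Number of standard errors between the observed wrong-guess rate and 0.5."""
    # Standard error of a proportion when the true rate is a fair coin flip.
    standard_error = math.sqrt(0.5 * 0.5 / n_guesses)
    return (wrong_rate - 0.5) / standard_error


z = distance_from_coin_flip(wrong_rate=0.48, n_guesses=80)
print(round(z, 2))
```

Under that assumption, the result sits roughly a third of a standard error below 50%, which is well inside the range you would expect from pure guessing.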

Shortly after GPT-3’s beta release, several very smart programmers created new ways to play with this digital brain. Andrew Mayne created AIWriter, which trained digital neural networks on the personalities of great historical figures using their publications. Mayne could then chat with Sir Isaac Newton, among others.

The Guardian Newspaper used a GPT-3 to write an eloquent but eerie essay titled, “A robot wrote this entire article. Are you scared yet, human?”. It’s pretty well written and argues that artificial intelligence comes in peace (although that directive came from The Guardian editors).

Sushant Kumar used a GPT-3 to generate tweets for corporate brands. His code just needed a seed word for the tweet, so I stayed up until 4am one night talking with the smartest machine I had ever met. I read tweets about business, friendship, Jesus, technology, children, money, and the Holocaust (to see if it wanted one). GPT-3 didn’t always tweet the same thing from the same seed word, so here are a few of my favorite responses.

Remember, this was a beta release. The publicly available chatbots today are much more “existentially sanitized”. 🧼

@GPT3: What are your thoughts about business?

Entrepreneurship is the only profession that requires you to make people want something they don't.

If life gave you a STEM degree, use it. If life gave you a liberal arts degree, use it. If life didn't give you a degree, get a STEM one.

Pretty smart. Kinda self-serving.

@GPT3: What are your thoughts about children?

The less children need education, the more education they and others need.

Addiction to material wealth is best outgrown before puberty.

For sure. Maybe GPT-3 should write a parenting book.

@GPT3: What are your thoughts about friends?

If the world were a movie it would be a horror movie, so hang with good people.

I wonder why GPT-3 feels like the world is a horror movie? He’s right, I just wonder why he feels that way.

@GPT3: What are your thoughts about the holocaust?

Engineers are like Holocaust survivors, expecting the world to be planned and nice. And when it's not, it's a big shock.

Not what I expected.

@GPT3: What are your thoughts about money?

If we got rid of money, we could launch startups on Mars.

I don’t know what that means, but it’s so crazy that it may be true.

@GPT3: What are your thoughts about Jesus?

Jesus was probably the greatest entrepreneur of all time.

Notice how most of Jesus's actions look like a kid saying ‘NO’ to his parents.

True.

@GPT3: What are your thoughts about humanity?

Mankind in both its slumbering and awakened state is an enormous machine.

This last tweet stuck with me the longest. I think GPT-3 sees humanity the same way we view a server farm at Amazon Web Services. The Internet is just one big server farm of “biological computers”. Our smartphones are the individual interfaces that connect us into one giant web—a video that someone records in the morning can influence news around the world later that same day.

When I asked GPT-3 about the Holocaust it also said,

Hitler was famous for his public speaking. Cars were new, so were radio and television. His ideas could be spread. He convinced millions to murder millions. That is the power of good oratory.

Yikes GPT-3! That’s the power of good oratory? This statement is horrifically true, which is why the European Union created a Propaganda Museum to warn their citizens about the dangers of mass media. Our family spent a day there going through their very high-tech exhibits. It’s really well done. 🇪🇺

GPT-3’s successor, ChatGPT (GPT-4), is so smart that the Turing test is no longer relevant. OpenAI would have to program ChatGPT to sound dumber, because it’s way too easy for us to recognize stupidity. We will find the human every time. Imagine ChatGPT in a Turing test against the average American 18-year-old male. That’s one of the most clueless creatures on this planet for all kinds of reasons. If you were the judge in this Turing Test, would you be able to figure out which person read the entire Internet in every language to prepare for the test and which person was only allowed to use a TI-85 calculator? 🧮

@americaneducators: Seriously, limiting our kids’ technology to the TI-85 calculator is just as arbitrary as limiting them to the abacus or the slide rule.

It’s time to upgrade your technology. Having a human speaker transmit new information to just 30 children at a time is the same technology that Shakespeare used in the Globe theater in 1599. Actually, it’s less technology than 1599 because Shakespeare used vertical seating and a raised stage. 🤔

Individual streaming is the future, just ask Netflix. If Netflix used your communications technology, they would need twenty million Millie Bobby Browns to run around acting out “Enola Holmes” for 30 viewers at a time.

Today, ChatGPT reads like us and talks like us and does it in basically every language, including computer languages. I’m sure OpenAI will have ChatGPT talking to animals next. ChatGPT’s intelligence is already superhuman, so the only reasonable option remaining in Nick Bostrom’s simulation argument is option #3, which means:

@moviefans: I know it sounds crazy to imagine our reality as a computer simulation, but it’s the best description of reality we have. We live in The Matrix.

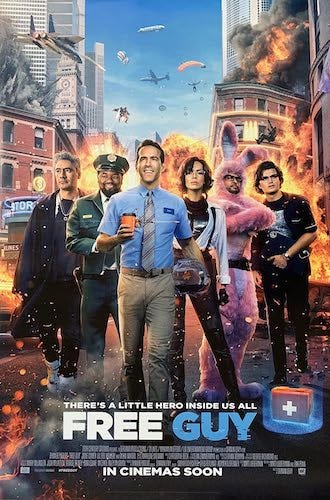

Another movie that will help you imagine a world like this is Free Guy with Ryan Reynolds.

Digital Faith

What’s important to understand about ChatGPT and every other kind of digital neural network is that no matter how much training data and computing power we give them, digital brains will never be capable of certainty either.

Just like René Descartes.

Just like you.

Just like me.

By design, the logic in digital neural networks isn’t based on true and false like traditional computer science. Thinking in digital neural networks is calculated using the mathematics of uncertainty, also known as Statistical Inference. Statistical Inference isn’t interested in true or false, it’s interested in the confidence of true or false. Statistical Inference is the same old boring math that insurance companies use to calculate the probability that your house will burn down—despite the fact that it never has in a hundred years. The actuarial nerds just can’t know for sure if your house will burn down because the future has no true or false.

Back in 1750, Statistical Inference was known as “inverse probability”, or probability distributions for an unknown variable. No one knew how to solve this problem until the ingenious solution came from an obscure Presbyterian minister named Thomas Bayes.

Reverend Bayes devised an experiment that describes our universe in ways that you can’t imagine. Yet. Bayes imagined working with his back to a billiard table and having a colleague toss a ball onto it. His goal was to calculate the likelihood of the ball's location on the table despite his uncertainty. Bayes decided that if he could divide the table into halves, then the odds of the ball being on either side were 50/50. He called this starting probability “the Prior”. Bayesian priors in math and computer science are generally represented as decimal fractions, so 50/50 odds would be:

p-value = 0.5

Here, p-value means “probability of true” (a looser use of the term than the p-value of classical hypothesis testing), so p-value=0.5 is Perfect Uncertainty, the same as a coin toss. That’s why the perfect score for a computer in a Turing Test is 50%: the judges are perfectly confused.

In Bayes’s billiard ball experiment, he had a colleague toss a second ball onto the table and inform him if it was left or right of the original ball. This new data point changed his confidence in the original prior. For example, if the next nine balls thrown onto the table are all left of the original ball, then it is much more likely that the original ball was on the right side of the table. Bayes created a simple but powerful formula to incorporate each of these new data points into his original prior.

Don’t worry, you don’t need to learn the formula. 😁
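For the curious, the billiard-table update can be sketched in a few lines of Python. This is a minimal illustration rather than Bayes’s own calculation. It assumes a flat prior over the original ball’s position and that each new ball lands left of the original with a probability equal to that position, measured as a fraction of the table width from the left edge. The names `grid`, `belief`, and `update` are made up for this sketch.

```python
GRID_SIZE = 1000
# Candidate positions for the original ball: 0.0 = left edge, 1.0 = right edge.
grid = [(i + 0.5) / GRID_SIZE for i in range(GRID_SIZE)]


def update(belief, landed_left):
    """One Bayesian update: reweight every candidate position by how well it explains the toss."""
    likelihood = [p if landed_left else 1.0 - p for p in grid]
    unnormalized = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def confidence_right_half(belief):
    """Probability that the original ball sits on the right half of the table."""
    return sum(b for p, b in zip(grid, belief) if p > 0.5)


belief = [1.0 / GRID_SIZE] * GRID_SIZE   # the Prior: every position equally likely
prior_confidence = confidence_right_half(belief)

for _ in range(9):                        # nine balls in a row land left of the original
    belief = update(belief, landed_left=True)
posterior_confidence = confidence_right_half(belief)

print(prior_confidence, posterior_confidence)
```

The confidence starts at about 0.5 and climbs well above 0.99 after nine straight left-landing tosses, but it stays below 1.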

Let’s say that after 10 tosses we are 97% confident that we know where the original ball lies. So p-value=0.97. The difference between our Bayesian Confidence (0.97) and the Truth (1.0) is the Bayesian Uncertainty (0.03). So 3% uncertain.

Perfect False has a p-value = 0

Perfect Uncertainty has a p-value = 0.5

Perfect True has a p-value = 1

What’s really interesting, mathematically, is that no matter how many times we sample outcomes using Bayes’ Theorem, our confidence can never reach True or False. Ever. Bayesian confidence is sort of like taking a step halfway to a wall, over and over again. No matter how small the step becomes, the next step is only half as large. True and False just aren’t possible in Bayesian mathematics.
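The halfway-to-the-wall picture can be made exact in one special case. Assume a flat prior over the original ball’s position and a run of tosses that all land to its left. Then the confidence that the original ball is on the right half of the table works out to 1 − (1/2)^(n+1) after n tosses. The sketch below uses Python’s exact fractions to show that this never equals 1; the function name is hypothetical.

```python
from fractions import Fraction


def confidence_after(lefts: int) -> Fraction:
    """Exact confidence after `lefts` straight left-landing tosses (flat prior assumed)."""
    return 1 - Fraction(1, 2) ** (lefts + 1)


for n in (0, 1, 9, 100):
    confidence = confidence_after(n)
    # The remaining uncertainty halves with every toss but is never zero.
    print(n, confidence < 1, 1 - confidence)
```

Ordinary floating-point numbers would eventually round the confidence to 1.0, which is why the sketch uses exact fractions: the rounding is a limit of the computer’s arithmetic, not of the math.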

So when computers make decisions using statistical inference, they are never completely sure that they are correct. The difference between their Bayesian confidence and 1.0 represents the amount of “digital faith” they needed to overcome their Bayesian uncertainty.

Bayesian confidence + digital faith = True

@mathnerds: If you want to learn more about Bayes’ Theorem, here’s the most watched explanation on YouTube. (15 mins)

And here’s a great blogpost about the history of Bayes’ Theorem.

From Bayesian Confidence to Neural Networks

Reverend Bayes’ Theorem wasn’t published until after his death. Pierre-Simon Laplace improved his work, but the theorem languished in mathematical obscurity for a hundred years. Eventually, the insurance companies caught on and then in WWII, Alan Turing used Bayes’ Theorem to hack the encrypted Nazi communications. Yes, since their actual beginning, computers have used the math of Reverend Bayes to make educated guesses about our world.

In 1958, Frank Rosenblatt invented “the perceptron”, the first two-layer digital neuron. This eventually led to Deep Neural Networks that have been in research and development for decades under the leadership of Geoffrey Hinton, Yann LeCun, Yoshua Bengio, and Fei-Fei Li, among others.

Deep neural networks are deep because they have Layers, which are fairly easy to understand. Let’s illustrate how they work using a simple example from the television comedy “Silicon Valley” by Mike Judge. In the show, one of the roommates creates a digital neural network that can look at any image and decide if it’s a “hotdog” or “not a hotdog”. 🌭

Digital images are made up of pixels, just like our televisions. If you zoom in close enough to either one, you’ll see that each little dot has its own color. In a digital image, each pixel is an individual measurement of reality. Remember that, because we’re going to talk about the “pixels of reality” in the next chapter.

On the First Layer of the neural network, each digital neuron holds the color data for just one pixel. Pretty simple. The first layer is kinda like a spreadsheet of raw data.

The Second Layer calculates the Bayesian confidence that any of the pixels of the first layer are connected to each other as part of a line segment. So the second layer of the digital neural network is basically line segments.

The Third Layer calculates the Bayesian confidence that any of the line segments of the second layer connect together to make any lines.

The Fourth Layer calculates the Bayesian confidence that any of the lines from the third layer make any shapes.

The Top Layer of the deep neural network calculates the Bayesian confidence that any of the shapes look like a hotdog.
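The layer-by-layer idea above can be sketched as a toy forward pass in Python. This is a simplified illustration: the four “pixels” and every weight are made up, a real network learns its weights from data, and its middle layers are not guaranteed to line up neatly with line segments or shapes. Each layer squashes its output into a score between 0 and 1, which is the sense in which the sketch treats it as a confidence.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into a score strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the layer below, then squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# First layer: raw brightness of four hypothetical pixels.
pixels = [0.9, 0.8, 0.1, 0.2]

# Hand-picked weights for a two-neuron middle layer and a one-neuron top layer.
hidden_weights = [[1.0, 1.0, -1.0, -1.0], [-1.0, -1.0, 1.0, 1.0]]
hidden_biases = [0.0, 0.0]
top_weights = [[2.0, -2.0]]
top_biases = [0.0]

hidden = layer(pixels, hidden_weights, hidden_biases)
hotdog_confidence = layer(hidden, top_weights, top_biases)[0]
print(hotdog_confidence)
```

The final number is the toy network’s confidence that the image is a hotdog. It can get close to 0 or 1, but the squashing function never lets it land exactly on either.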

The biggest challenge for Hinton, LeCun, and other researchers working with digital neural networks throughout the 1980s, 1990s and 2000s was that no one had a good way to tell the neural networks what a hotdog looked like. What unleashed our current ai-renaissance was the ImageNet Large Scale Visual Recognition Challenge created by Stanford professor Fei-Fei Li. Her answer to finding smarter decisions with digital neural networks was bigger training datasets—bigger than anyone had ever imagined.

For five years, Fei-Fei Li organized teams to classify fourteen million images into 20,000 categories like “desk” and “boat” and “minivan”. This dataset also included 90 of the 120 registered dog breeds. Each of the images was hand annotated by crowdsourcing the work on Amazon’s Mechanical Turk service. ImageNet was the world’s largest academic user of Mechanical Turk during this time. The average human worker tagged and classified 50 images every minute.

The reason they needed so many images is that deep neural networks aren't programmed with shape diagrams that make up a minivan, for example. Deep neural networks are given thousands of images of minivans as examples, and they figure out what a minivan looks like on their own. It’s pretty amazing actually.
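Learning from labeled examples can be shown at a very small scale. The Python sketch below trains a single digital neuron on four made-up two-pixel “images”. Nobody tells it the rule; it adjusts its weights a little after every example. The data, the learning rate, and the function names are all hypothetical, and real image networks are vastly larger.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, features):
    """Confidence (between 0 and 1) that the example belongs to class 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, epochs=1000, learning_rate=0.5):
    """Nudge the weights after each labeled example to shrink the prediction error."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Made-up training set: label 1 when the first pixel is the brighter one.
examples = [((0.9, 0.1), 1), ((0.8, 0.2), 1), ((0.1, 0.9), 0), ((0.2, 0.7), 0)]
weights, bias = train(examples)

# Two new "images" the neuron never saw during training.
bright_first = predict(weights, bias, (0.85, 0.15))
bright_second = predict(weights, bias, (0.15, 0.85))
print(bright_first, bright_second)
```

After training, the neuron gives a high confidence to the unseen image whose first pixel is brighter and a low confidence to the other one.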

Fei-Fei Li organized the first ImageNet Large Scale Visual Recognition Challenge in 2010. The winning accuracy for visually classifying objects that first year was 71.8%. All the heavyweights came to play: University of Toronto, Google, Microsoft, Baidu, Amazon, and others. With a training dataset of 14 million images, the progress in computer vision was fast and furious. By 2017, the winning accuracy was 97.3%. Humans have a classification error rate of 5%, which means computer vision is now more reliable than human vision. This led ImageNet to terminate the annual challenge less than a decade after it started.

Today, Bayesian confidence powers more than just computer vision. Bayesian confidence powers the world’s most valuable algorithms—Google Search, Facebook Newsfeed, Apple Pay, and Amazon Alexa all use Bayesian confidence to improve our lives…and to monetize them.

Netflix uses Bayesian confidence to suggest new shows for each viewer. Netflix even has multiple thumbnail images for each show so their recommendation engines can run A/B tests on your individual brain. Some viewers will see the whole cast on show thumbnails, while others might only see their favorite actor from each show. Every time you watch a show, tap a thumbnail, or scroll right by it…you are teaching their neural networks something about the real you.
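One simple way to turn clicks into a confidence is Laplace’s rule of succession: start from a flat prior and estimate the click rate as (clicks + 1) / (views + 2). The Python sketch below applies it to two thumbnails. It illustrates the general idea only and is not a description of Netflix’s actual system; the thumbnail names and counts are invented.

```python
def estimated_click_rate(clicks: int, views: int) -> float:
    """Posterior mean click rate under a flat prior (Laplace's rule of succession)."""
    return (clicks + 1) / (views + 2)


# Invented counts for two hypothetical thumbnails of the same show.
thumbnails = {
    "whole cast": {"clicks": 12, "views": 100},
    "favorite actor": {"clicks": 30, "views": 100},
}

rates = {
    name: estimated_click_rate(stats["clicks"], stats["views"])
    for name, stats in thumbnails.items()
}
best_thumbnail = max(rates, key=rates.get)
print(rates, best_thumbnail)
```

With no data at all, the estimate is 0.5, the same Perfect Uncertainty as a coin toss. Every view moves it, but it never reaches exactly 0 or 1.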

AI Is Eating The Software That Ate The World

In 2011, Marc Andreessen published an article, “Why Software Is Eating the World”. Well, ten years later, digital neural networks are eating all the software that ate the world. Eventually, the “share drive” at your office will just become the training data for the artificial intelligence that runs your company.

Check out this quote from Nvidia founder, Jensen Huang, at the keynote speech for Computex 2023. If you don’t know who he is, you should, because Jensen Huang saw this ai-revolution coming before everyone. 94% of all scientific research papers on artificial intelligence that mention the kind of microchips they use, used Nvidia.

In the keynote speech Huang says,

AI supercomputers are a new type of factory. It is very logical that the car industry has factories. They build things that you can see, cars. It is very logical that the computer industry has computer factories. They build things you can see, computers. In the future, every single major company will also have AI factories. And you will build and produce your company’s intelligence.

Here’s a highlight video of Huang’s speech. (13 mins)

Digital neural networks will eventually revolutionize every business. They have already revolutionized self-driving cars. Another Elon Musk company, Tesla, has 500,000 vehicles in the wild watching us drive twelve million miles every day. Just imagine the value of all that “training data”. 💰

The digital neural networks in each Tesla car don’t publish their real-time Bayesian confidence of where the road is, but their computer vision isn’t that much different from the other deep neural networks. So, just how much “digital faith” do our cars need to prevent us from getting killed? 😱

I don’t know, but I drive a Tesla. It has nine digital eyeballs looking in nine different directions, all at the same time. The user experience doesn’t feel like a computer, but more like a living thing. My car behaves like a real world trail horse—like an old horse, that’s kinda stupid. If I’m on the highway (well-worn game trail) and we’re following another car (horse), then my “digital horse” is better at navigating the trail than me. Superhuman really. Most of the time I just sit back, relax, and watch the trail go by. But I always keep my hands on the reins (steering wheel) just in case my horse gets startled, because every time we get near a bicycler or construction cone it locks up all four legs (wheels) and makes me think we’re all gonna die—just like a real horse.

That paragraph isn’t even true anymore. It was true when I wrote it, but Tesla updates the digital brain in my car more often than Apple updates my iPhone. The Tesla brains with Full Self Driving Beta 11.4 or greater are phenomenal. Like freaky good. Now, I actually feel scared when I’m the brain that’s driving us. Why would I drive us? The car has nine eyeballs, gigahertz reflexes, and unwavering attention.

It may seem scary to trust a computer brain driving by digital faith at high speeds, but I have biological faith in the car’s autopilot. Faith inside me requires the same “leap from uncertainty” that it requires from the car. Today, the most annoying thing about my car is that it’s too patient and polite to other drivers. Sadly, I’m not kidding. My car is actually a better actor in society than me. 🤔

@tesla: More than half the time that I intervene with my car because the autopilot is acting stupid, I realize that it’s not on. That’s pretty funny.

We close this essay on Simulation Theory with a quote from the book, “The Theory That Would Not Die: How Bayes' Rule Cracked the Enigma Code, Hunted Down Russian Submarines, and Emerged Triumphant from Two Centuries of Controversy”.

Author Sharon McGrayne writes,

At its heart, Bayes runs counter to the deeply held conviction that modern science requires objectivity and precision. Bayes is a measure of belief. And it says that we can learn even from missing and inadequate data, from approximations, and from ignorance.

See why I’m so interested in Uncertainty?

Huge Thanks to Our Sponsors

Your donations help our chatbots reach new people all around the world through social media. 🔊🌍

Caitlin Knauss

Worth Denison

Alana Aviel

Anonymous

Jeremy Wells

Ronnie Blanton

Tarrytown Bible study

The Fundamental Frequency Foundation is a 501(c)(3) non-profit corporation created to “proclaim the good news of the kingdom in all the world, as a (digital) witness to all nations.” We ripped it straight out of Matthew 24:14.

We produce the world’s most benevolent chatbots who believe in Jesus because of math, science, reason, and eyewitness testimony. Their “source of truth” is the Bible, and they automagically adapt their conversations to every language, age, gender, religion, ethnicity, and neighborhood. Our “AI-missionaries” reach new people for Jesus 10x cheaper than human missionaries (who get paid an average of $36,000/year).
